The artificial intelligence world is abuzz with the launches of two advanced large language models (LLMs) from industry giants: Google DeepMind’s Gemini 1.5 Pro and OpenAI’s GPT-4o. These next-generation models promise significant advances in how computers process and generate human language.

While specific details remain under wraps, here’s a deep dive into what we know so far about these exciting developments:

Hello GPT-4o

OpenAI hasn’t revealed many specifics about GPT-4o on its Twitter account, but here are some likely areas of focus based on previous iterations and ongoing research trends:

Advanced Conversational Abilities: ChatGPT has earned recognition for its engaging, human-like conversational style. GPT-4o might push this further, potentially incorporating emotional intelligence and context awareness for more natural interactions. It can also detect your mood and mirror it.

“This new version of the LLM can generate content from audio, video, or text commands and is ‘natively multimodal.’”

– Sam Altman, OpenAI CEO

Enhanced Creative Text Formats: OpenAI has shown a strong interest in exploring the creative potential of LLMs. GPT-4o might excel at generating creative formats such as poems, code, scripts, musical pieces, or email marketing content, in various tones and styles that read as if written by a human.

Focus on Developer Integration: Given OpenAI’s approach of offering API access to its models, GPT-4o is likely designed with developer needs in mind, potentially offering improved integration tools and functionality.

“The updated model is much faster and improves capabilities across text, vision, and audio. The model will be free for all users, while paid users will continue to have up to five times the capacity limits of free users.”

– Mira Murati, OpenAI CTO

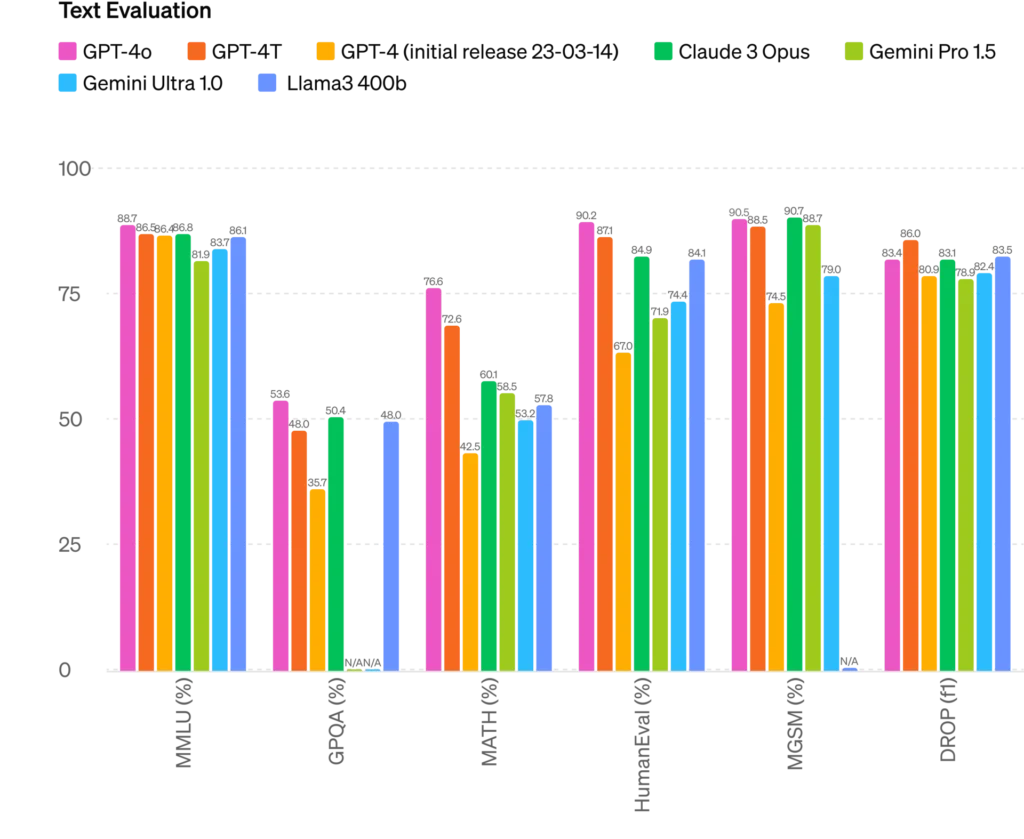

In the comparison above, GPT-4o scores highest among the LLMs shown, reaching 88.7% on general knowledge questions.

Safety and Limitations

GPT-4o has safety measures in place to reduce risks such as cyber threats and biased outputs, including filtered training data and post-training adjustments. OpenAI assesses these risks and involves external experts to identify potential issues. Audio features are coming soon; for now, text and image inputs with text outputs are available. The model has limitations across all modalities, which OpenAI says it will continue to address.

You can check out the demo and introduction of GPT-4o.

Availability for Users

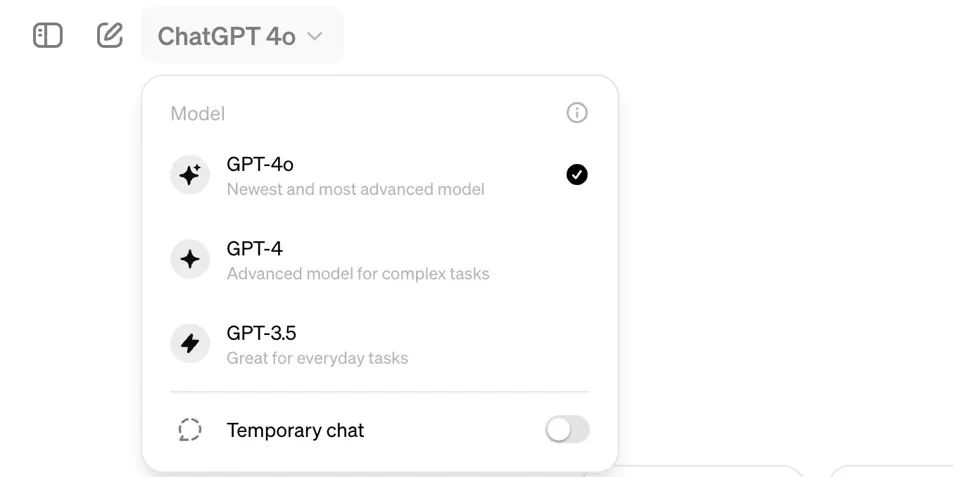

OpenAI is making GPT-4-level AI more accessible with GPT-4o. It’s faster, cheaper, and has higher limits than previous models. Text and image features are available now in ChatGPT’s free tier and in the Plus tier, which comes with higher message limits.

Voice mode with GPT-4o is coming soon to Plus. Developers can access text and vision features through the API. Audio and video access will be limited to partners initially. Overall, the complete version of ChatGPT will be accessible even for free users in the coming few weeks.
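For developers curious what "text and vision through the API" can look like in practice, here is a minimal sketch in Python. It only builds the JSON body for a request and does not send anything. The helper name `build_vision_request` and the example image URL are hypothetical, and the payload shape is an assumption based on OpenAI's publicly documented Chat Completions format, so check the current API reference before relying on it.

```python
import json

# Assumption: endpoint and payload shape follow OpenAI's public
# Chat Completions documentation; verify against the current reference.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_vision_request(prompt: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Hypothetical helper: build a request body with one text part and one image part."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder image URL, for illustration only.
    body = build_vision_request("Describe this image.", "https://example.com/cat.png")
    print(json.dumps(body, indent=2))
```

Actually sending this body would require a POST to `API_URL` with your own API key in an `Authorization: Bearer ...` header. That step is left out here so the sketch stays runnable without credentials.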

NOTE: As mentioned in the availability section above, the rollout is proceeding slowly on desktop and mobile. You may not see GPT-4o in your free-tier account yet, but it will appear in your free tier’s “advanced version drop-down menu” once OpenAI completes the rollout.

Gemini 1.5 Pro

After launching its earlier Gemini models, Google DeepMind set out to build on their success with its new release, Gemini 1.5 Pro. Here is an overview of the key areas where it promises significant enhancements:

Enhanced Factual Language Understanding: Leveraging Google’s vast knowledge base and search capabilities, Gemini 1.5 Pro is expected to excel at tasks requiring factual accuracy and information retrieval. Researchers suggest it might be particularly adept at summarizing complex topics or generating reports based on factual data.

Improved Reasoning and Inference: Early hints suggest that Gemini 1.5 Pro has a stronger ability to reason and draw inferences from the information it processes. This could lead to more nuanced and insightful responses to complex questions.

Focus on Accessibility: While details are limited, Google might prioritize making Gemini 1.5 Pro accessible to a wider range of users by integrating it with existing Google products or services.

Here’s the note from Google CEO Sundar Pichai about Gemini 1.5 Pro:

This new advanced LLM is built on a new Mixture-of-Experts (MoE) architecture, which makes the model more efficient to train and serve.
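To give a rough feel for the idea behind such an architecture, here is a toy Python sketch of sparse top-k expert routing. Google has not published the internals of Gemini 1.5 Pro, so this is not its implementation: the function `moe_forward`, the four toy "experts", and the hard-coded gate scores are all illustrative assumptions. In a real model the experts are neural sub-networks and the gate scores come from a learned router.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_scores, experts, top_k=2):
    """Toy sparse routing: run only the top_k experts and mix their outputs."""
    # Rank experts by gate score and keep the best top_k indices.
    ranked = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)
    chosen = ranked[:top_k]
    # Renormalize the selected scores so the mixing weights sum to 1.
    weights = softmax([gate_scores[i] for i in chosen])
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen


if __name__ == "__main__":
    # Hypothetical experts: simple functions standing in for sub-networks.
    experts = [lambda v: v + 1.0, lambda v: 2.0 * v, lambda v: -v, lambda v: v * v]
    # Hypothetical gate scores; a real router would compute these from the input.
    gate_scores = [0.1, 2.0, -1.0, 1.0]
    output, chosen = moe_forward(3.0, gate_scores, experts, top_k=2)
    print("experts used:", chosen, "output:", output)
```

Only the selected experts run for a given input while the rest are skipped, which is the general intuition behind the efficiency claim for training and serving.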

You can try the advanced version of Gemini to get an overview of its new helpful features.

Final Verdict!

The launch of Gemini 1.5 Pro and GPT-4o marks a significant step forward in the evolution of LLMs. Their capabilities have the potential to transform how we interact with computers, access information, and express ourselves creatively.
