Word is spreading that Liquid AI’s first series of generative AI models is outperforming other LLMs.

Let’s have an overview of what’s special about this new generative AI innovation…

Liquid AI, an MIT spin-off and foundation model company, has announced the launch of its latest AI models, known as “Liquid Foundation Models (LFMs),” which it says are set to redefine how generative AI operates.

The models come in three sizes and are not built on the transformer architecture. Even without the architecture that dominates today’s LLMs, these LFMs compete with other AI language models.

Furthermore, these models are specifically designed to compete with traditional large language models (LLMs) such as GPT, Gemini, and Gemma, and early benchmarks suggest that they may even outperform these older counterparts.

What Makes These Generative AI Models Unique?

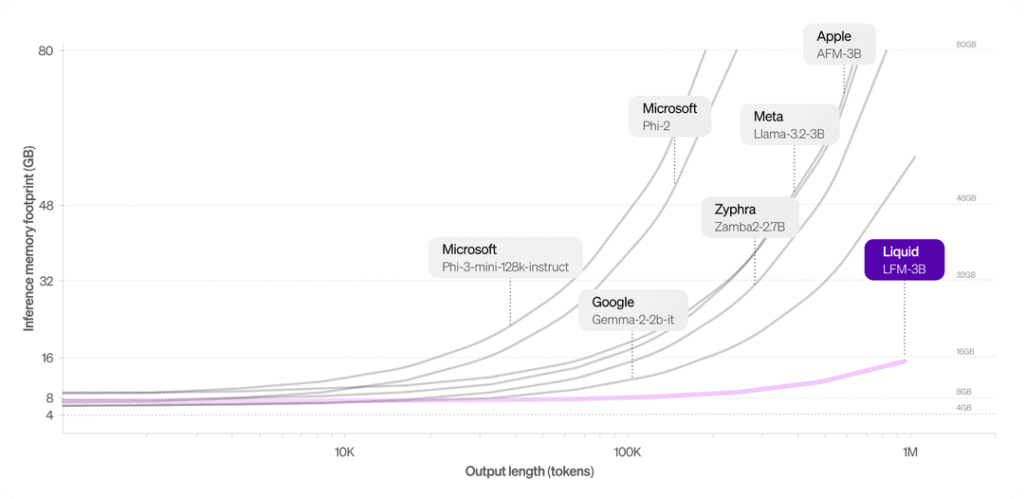

What sets LFMs apart is their ability to process large amounts of text with fewer computational resources. For instance, the LFM-3B and LFM-40B models use less memory than larger models while delivering the same or better performance.

This means that tasks like text summarization, chatbot conversations, and document processing can be done more efficiently with these new AI models.

The innovative structure of these LFMs allows them to maintain context over long inputs, which is a significant challenge for AI language models.

They excel at retaining information over extended conversations or long documents, making them suitable for real-world applications like customer service bots, mobile apps, and even edge computing devices.
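To make the memory point concrete, here is a rough back-of-the-envelope sketch in Python. It does not model LFMs, whose internals are not described here. It only estimates the key-value cache that a standard transformer keeps while processing a long input, which is the kind of memory cost that grows with context length. The function names and the layer, head, and dimension figures are hypothetical values chosen for illustration, not the configuration of any specific model.

```python
# Rough estimate of a transformer's key-value (KV) cache size.
# All model dimensions below are hypothetical, for illustration only.

def kv_cache_bytes(num_layers, num_kv_heads, head_dim, context_tokens,
                   bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence.

    The leading factor of 2 covers one key tensor plus one value tensor
    per layer; bytes_per_value=2 assumes 16-bit numbers.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * context_tokens * bytes_per_value)


# Hypothetical mid-sized transformer configuration (an assumption).
HYPOTHETICAL_MODEL = dict(num_layers=32, num_kv_heads=8, head_dim=128)


def kv_cache_gib(context_tokens):
    """KV cache size in GiB for the hypothetical model above."""
    total = kv_cache_bytes(context_tokens=context_tokens, **HYPOTHETICAL_MODEL)
    return total / 2**30


for tokens in (4096, 32768):
    print(f"{tokens:>6} tokens -> {kv_cache_gib(tokens):.1f} GiB")
```

With these made-up settings the cache costs 128 KiB per token, so it grows from 0.5 GiB at 4,096 tokens to 4 GiB at 32,768 tokens. That linear growth is why a design that needs less memory per token matters on phones and edge devices.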

What Do Language LFMs Excel At Today?

Language LFMs are proficient in delivering broad and specialized knowledge across various topics. They handle mathematical problems and logical reasoning with considerable accuracy.

Moreover, their efficiency shines in tasks involving long-context scenarios, allowing them to maintain coherence and relevance over extended pieces of text.

English is their primary language, but they also support multiple other languages, including Spanish, French, German, Chinese, Arabic, Japanese, and Korean, making them versatile for multilingual applications.

Current Limitations of Language LFMs

Despite their strengths, Language LFMs struggle with certain tasks. For instance, they are less effective in zero-shot coding challenges and can encounter issues when performing precise numerical calculations.

They also fall short when handling real-time, time-sensitive information and struggle with simple but specific tasks, such as counting letters in words.

Additionally, human preference optimization techniques have so far been applied only to a limited extent. Applying them more extensively could help the models align better with human expectations and preferences.

What Is the Future of Liquid Foundation Models?

Liquid AI’s LFMs also pave the way for future innovations, as the company aims to expand their use to other fields like video, audio, and time-series data.

This versatility highlights the growing impact of generative AI in various sectors, and the potential of these new AI models to shape future developments.

Bottom Line!

In summary, with the introduction of LFMs, Liquid AI is poised to challenge industry leaders in the generative AI space. These models aim to offer efficient, high-performance solutions across industries, making Liquid AI a key player to watch as AI language models evolve.
